John O. Campbell

Since its beginnings, Western science has evolved a reductionist vision, one explaining more complex phenomena as compositions of simpler parts, and one that is amazingly good at describing and explaining most entities found in the universe. Wikipedia provides a commonly accepted definition of reductionism (1):

Reductionism is any of several related philosophical ideas regarding the associations between phenomena, which can be described in terms of other simpler or more fundamental phenomena. It is also described as an intellectual and philosophical position that interprets a complex system as the sum of its parts.

One reductionist triumph is the field of statistical mechanics, which describes the behaviour of large-scale materials completely in terms of the behaviour of individual atoms or molecules.

But in recent decades reductionism has fallen out of favour, especially in the public imagination, due to a desire for more wholistic (sic) explanations. Richard Dawkins writes (2):

If you read trendy intellectual magazines, you may have noticed that ‘reductionism’ is one of those things, like sin, that is only mentioned by people who are against it. To call oneself a reductionist will sound, in some circles, a bit like admitting to eating babies.

This popular rejection may partially reflect rejection of scientific explanations in general, but for several decades thoughtful scientists have been adding their voices, offering serious criticisms that are difficult to refute (3; 4; 5). Still, most scientists continue to prefer some version of the reductionist explanation. Richard Dawkins offers a particularly spirited defence of what he calls hierarchical reductionism (2):

The hierarchical reductionist, on the other hand, explains a complex entity at any particular level in the hierarchy of organization, in terms of entities only one level down the hierarchy; entities which, themselves, are likely to be complex enough to need further reducing to their own component parts; and so on.

Here we explore this controversy. It is perhaps an inevitable controversy, one sure to grow into a scientific revolution because of recently discovered additions to the small number of fundamental entities at the bottom of the reductionist hierarchy. These new, revolutionary discoveries are information and knowledge. Traditionally, reductionist explanations have been ultimately cast in terms of mass, energy and forces, but once information enters the mix, as an equivalent form of energy and mass, all theories are ripe for transformative reinterpretation, with interpretations explaining how information acting together with energy and mass shapes all things at the most basic level (5; 6). It is as if scientific explanations developed since Newton and Descartes must now be supplemented with an additional dimension, an informational dimension.

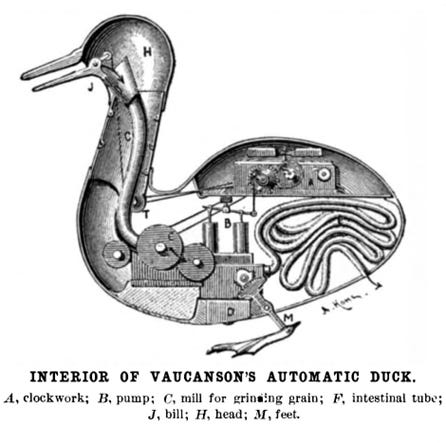

Figure 1: Descartes proposed that animals were essentially machines, machines that should be understood in terms of the workings of their parts.

Reductionism itself is a victim of this kind of revolutionary transformation. Once reductionism expands to encompass the fundamental substance of information, it is no longer reductionism but transforms into something more complete – a two-way inferential process between levels, not only drawing, in a reductionist manner, on lower physical levels of mass and energy but also on information and knowledge existing at a higher level.

And the discovery of information may have arrived just in time to save reductionism from a severe, possibly fatal problem. It is the problem of the evolutionary origins of order and complexity, phenomena that have never had satisfactory reductionist explanations (3). Energy, mass, and forces, on their own, tend towards equilibrium; undirected, they cannot create order or complexity. Order and complexity may only arise if the equations describing mass, energy and forces are subject to extremely fine-tuned initial or boundary conditions. Biology, for example, explains the growth of biological complexity as due to natural selection, which accumulates and refines the attempts of generations of phenotypes to maintain themselves within existence and encodes the more successful experiences within DNA. Thus the life of each new individual in each new generation is guided by its (epi) genetics, the finely tuned initial and boundary conditions accumulated by former generations in the tree of life. And this implies that life is not a reductionist process: its guiding knowledge is not due to the uninformed chemistry and physics of lower levels. Life occurs when these lower levels are guided and informed by knowledge gathered over evolutionary time, and this knowledge exists at a higher level than uninformed physics and chemistry.

This view of the origin of order answers the question ‘Where did all the knowledge, in the form of fine-tuned initial conditions, come from?’. Reductionism cannot answer this. Any attempt to explain life in terms of only mass, energy, and forces is already at the bottom of the hierarchy and cannot account for these special conditions in terms of any lower level. These conditions contain knowledge (e.g., (epi) genetic knowledge [1]) and the origins of that knowledge demand an explanation. Reductionism posits linear, bottom-up causation but can offer no explanation of how the knowledge to create ever more complex entities, a trend away from equilibrium, is encoded into the most fundamental of physical building blocks. It is linear and offers no opportunities for feedback or other sources of control or regulation required for the existence of complex entities.

All knowledge is essentially evidence-based knowledge that accumulates through the process of (Bayesian) inference. Abstractly, inference is an interplay between two levels, the level of a model or family of hypotheses and the level of evidence spawned by those models. It is a cyclical process that is inherently non-reductionist.

Clearly, one-way causation is insufficient to explain the existence of complex entities, and better explanations must involve some form of two-way causation where the causal forces of the building blocks are orchestrated and regulated by knowledge residing at a higher level in the hierarchy. Our first clue to an answer is this: the process is a two-way street involving a cyclical structure.

We are quite familiar with the conditions that impose order on complex entities such as organisms – they are the conditions set by the (epi) genome, and this source of regulation is not spontaneously encoded at the more fundamental level of atoms but exists at the same level as biology itself. It is ironic that Dawkins, the champion of gene-centred biology, seems to have missed this point. As a process of two-way causation, we might view knowledge encoded in the (epi) genome as structuring, orchestrating, and selecting chemical and physical potentials to form the phenotype – the upward causation of chemical potential is regulated by downward genetic control. We may view the developmental process of organisms in terms of an (epi) genetic model that is followed as faithfully as possible to produce the phenotype.

But, in general, where did the model knowledge come from? In some cases, such as biology, we know quite well. Darwin presented heredity, or the passing of knowledge between generations, as a conceptual pillar in his theory of natural selection. And the ongoing microbiological revolution demonstrates that much of that knowledge is encoded within DNA. In biology, (epi) genetic knowledge is accumulated by natural selection which is, in general terms, a Bayesian process, one in which evidence of what worked best in the last generation inferentially updates the current genomic models to greater adaptedness (7).

This biological example can be abstracted into a two-step cyclical process in which the first step involves the transformation of an autopoietic model into a physical entity, and the second step involves the evidence provided by that physical entity’s quest to achieve existence, serving to update the model’s knowledge.

This describes an evolutionary process, with each cycle beginning with model knowledge acting to constrain the building blocks, explaining the formation of phenotypes under the influence of genotypes and, more generally, the formation of entities under the influence of information. And then to complete the cycle, the produced entity acts as evidence of what worked to update the model. With each cycle phenotypic adaptedness improves and this improvement updates the population level model for adaptedness encoded by genotype.

Arguably, with this tradition of including information and knowledge within its fundamental explanations, biology presaged the information revolution that would begin to diffuse through most branches of science in the latter half of the twentieth century. But even here reductionist biology has had an uneasy relationship with informational biology. We might argue that the (epi) genetic knowledge merely forms life’s initial and boundary conditions and that their workings are satisfactorily explained in terms of chemistry and physics. But this leaves unexplained the origin of these conditions that encode knowledge. And an explanation cannot be found at a lower level of fundamental machinery; explanations are only found at the higher level of evolutionary biology.

Reductionist explanations, when forced to accommodate information and knowledge, are extended beyond their possibilities and must transform into explanations that are no longer reductionist. This reductionwasism leaves an explanatory void, one that we will explore for hints of a way forward. But even at the start, with just the understanding that information and knowledge must play a large role in any explanation, we may detect a clear outline.

Prior to the information revolution, some researchers attempted, in a reductionist manner, to fill the biological explanatory void with some additional, vital force, but by the 1930s that view had been almost completely excluded from the scientific literature (8) – biological processes were beginning to appear amenable to reductionist explanations in terms of only chemistry and physics. Nonetheless some scientists, including the leading biologist J.B.S. Haldane (1892-1964), insisted that biology could not be completely understood within a reductionist framework.

The applicability of reductionist explanations to life was clarified by the quantum physicist, Erwin Schrödinger (1887 – 1961), in his 1944 book What Is Life? (10). Schrödinger claimed that all biological processes could be explained in terms of chemical and physical masses and forces – along with derivative concepts such as energy which is the effect of a mass moved by a force. But he also acknowledged inherited biological knowledge allowing organisms to maintain a low entropy state in defiance of the second law of thermodynamics, even speculating that this knowledge might be stored in aperiodic crystals, four years before Shannon introduced the mathematical concept of information (9) and nine years before the discovery of DNA’s role in heredity.

Schrödinger thus began to sketch an outline of biological processes as a synergy of reductionism guided by knowledge. While all biological processes can be explained purely in terms of physics and chemistry, these processes are orchestrated, regulated, or governed by knowledge stored in the (epi) genome selecting and specifying those processes.

One way in which this problem is currently framed is as downward versus upward causation, where downward causation is composed of information or evidence-based knowledge regulating the process of upward causation, which plays out according to the laws of physics and chemistry. George Ellis (born 1939) uses the informative example of computation where, at the bottom level, the flow of electrons through the computer’s components is well explained by physics in terms of upward causation, but the orchestration and regulation of those electrons, causing them to perform a useful computation, is supplied by knowledge contained in the algorithm coding the computation, in a form of downward causation imposed on the electron flow (3). While a computation can be explained as patterns of electron flow, the patterns performing the computation are specified by conditions imposed by the algorithm which directs it.

In this view it appears, in biology and computation at least, that two separate forms of causation are at work: one causing processes playing out as physics and chemistry and one orchestrating or specifying or selecting those processes from the multitude of possibilities. But any fundamental difference between these forms of causation also appears unlikely as even life’s DNA is chemistry governed by physical laws – the upward and downward perspectives on causality must share a fundamental unity.

To explore a possible form of this unity we first revisit the fundamental physical concepts forming the building blocks of reductionism: mass and force. Also of great importance to reductionist explanations is energy, which is related to mass and force in that energy is defined as mass moved by a force. In simple terms, upward causation occurs when forces move masses and change their energy. Thanks to Einstein we know that mass and energy are fundamentally equivalent and proportional to each other: E = mc^2, so a little mass is equivalent to a lot of energy. And so, we might view upward causation as ultimately starting from a single building block of mass/energy and the forces it generates.

Can we view downward causation similarly? What would correspond to a force and what to mass or energy? The answer suggested here is that all three of these physical concepts basic to upward causation are equivalent to a single concept of downward causation: information. And thus, we may identify information as the missing vital force thought necessary for biological explanations in the nineteenth century.

This startling claim might gain plausibility if we consider Szilard’s equation showing the equivalence of energy and information: 1 bit of information = kT ln 2, or about 3 x 10^-21 joules of energy at room temperature. A little energy represents a lot of information. As the PhysicsWorld website recounts (10):

In fact, Szilárd formulated an equivalence between energy and information, calculating that kTln2 (or about 0.69 kT) is both the minimum amount of work needed to store one bit of binary information and the maximum that is liberated when this bit is erased, where k is Boltzmann’s constant and T is the temperature of the storage medium.

1 gram mass = 9 x 10^13 joules energy = 3 x 10^34 bits

As the quantity of computer memory existing in the world today is about 10^23 bits, 1 gram of mass represents a quantity of information roughly 3 x 10^11 times larger.
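The arithmetic behind these figures can be checked with a short sketch. It assumes a storage temperature of 300 K (room temperature; the text does not state the temperature used) and takes the figure of 10^23 bits for the world's computer memory from the paragraph above. The function names are illustrative only.

```python
import math

BOLTZMANN_K = 1.380649e-23       # J/K (exact SI value)
SPEED_OF_LIGHT = 299_792_458.0   # m/s (exact SI value)


def joules_per_bit(temperature_kelvin: float = 300.0) -> float:
    """Szilard's energy equivalent of one bit: k * T * ln 2."""
    return BOLTZMANN_K * temperature_kelvin * math.log(2)


def mass_to_joules(mass_kg: float) -> float:
    """Einstein's mass-energy equivalence: E = m * c^2."""
    return mass_kg * SPEED_OF_LIGHT ** 2


def mass_to_bits(mass_kg: float, temperature_kelvin: float = 300.0) -> float:
    """Mass expressed as an equivalent number of bits at the given temperature."""
    return mass_to_joules(mass_kg) / joules_per_bit(temperature_kelvin)


one_gram_kg = 1e-3
world_memory_bits = 1e23  # figure quoted in the text, not independently verified

energy_joules = mass_to_joules(one_gram_kg)
bits = mass_to_bits(one_gram_kg)
ratio = bits / world_memory_bits

print(f"energy per bit at 300 K: {joules_per_bit():.2e} J")
print(f"1 gram = {energy_joules:.1e} J = {bits:.1e} bits")
print(f"ratio to world computer memory: {ratio:.1e}")
```

Because the bit count scales inversely with temperature, a colder storage medium would make the same gram correspond to proportionally more bits.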

And it has since been experimentally confirmed that energy can be converted to information and vice versa (11). Thus mass, energy and information are equivalent forms transforming one into another and, in recognition of this and other evidence, it may now be a consensus that information must join mass and energy as a fundamental component of all existence (5; 6).

The inclusion of information along with mass and energy as different aspects or states of the basic substance composing reality has suggested a type of monism to some researchers (12). But this is a different type of monism than might be expected to emerge from a reductionist account because of the inclusion of information or knowledge, which we suggest acts as a force performing downwards causation, thus breaking the reductionist mould.

We might note that the concept of force is closely aligned with the fundamental reductive substance of mass/energy and ask if the addition of information to this fundamental substance is also intimately related to a force. This force cannot be one of the four fundamental forces acting in upward causation known to science, it must be something different. The answer suggested by Steven Frank and others is that information acts as both a substance and a force, a force acting in the process of inference, a force that moves probabilistic models away from the ignorance of the uniform distribution, where everything is equally likely, towards a more knowledgeable or spiked distribution denoting greater certainty and one essential to the accumulation of evidence-based knowledge (13).

An important derived concept in the reductionist framework is work, or the movement of a mass by a force. For example, work is performed when the mass of a car moves due to the force provided by its burning fuel. We might ask if something analogous happens with the force of information: is it capable of moving a mass in a process we might call work? The answer, as demonstrated by Steven Frank, is yes (13).

This involves two different aspects of information: the first as evidence and the second as information contained in the model. Evidence acts as a force and model information acts as the mass that is moved (keeping in mind that information in this sense is equivalent to mass). In this view the force of evidence in Bayesian inference moves the information of the model. The distance that a model describing hidden states is moved is called information gain and is the distance moved towards certainty that is forced by the evidence (19). We may name this form of work knowledge – the distance a model is moved by the force of evidence.

Frank offers the example of natural selection as the force of evidence moving the genomic model to perform work (13):

For example, consider natural selection as a force. The strength of natural selection multiplied by the distance moved by the population is the work accomplished by natural selection.

Having introduced information as both a basic substance and a force producing work we may now examine the implications this holds for reductionist philosophical interpretation of complex systems as the sum of their parts. A straightforward approach is to consider information and inferred knowledge as additional parts forming the sum, along with mass, energy and the physical forces employed by reductionist explanations. At a stroke this inclusion accommodates downward causation or knowledgeable regulation as a complement rather than as an alternative to upward causation. In this view, knowledgeable generative models may orchestrate or regulate the many possibilities offered by upward causation to form complex entities capable of existence.

Once this possibility is entertained, we find examples of blended downward and upward causation everywhere. For example, if a society wants to build a bridge across a gorge, we start by composing a knowledgeable generative model – a model that will generate the envisioned bridge. This engineering model specifies the required reductionist components, the masses to be used, the forces which must be balanced and the energies which must be applied. Once the engineering model is completed the construction begins by following as closely as possible the generative model’s instructions for placing and connecting the reductionist components. The generative model exercises downward causation through its orchestration and regulation of the reductionist components while the reductionist components perform upward causation by following the laws of physics and chemistry to which they are subject.

Another familiar example of synergy between downward and upward causation is found in biology where a knowledgeable generative model formed by the genome orchestrates and regulates the masses, forces and energies of reductive components involved in upward causation to form the phenotype. The proverb ‘mighty oaks from little acorns grow’ is usually taken to mean that great things have modest beginnings, but a deeper meaning might be that the acorn, given the right conditions, contains sufficient autopoietic knowledge to orchestrate physical and chemical processes into the creation and maintenance of a mighty oak tree.

We might note that these examples are illustrative of a general autopoietic process where the downward causation of generative models generates things into existence through the orchestration of upward causation - and are in complete accord with the free energy principle as developed by Karl Friston and colleagues (14). The free energy principle states that all systems act to minimize the surprise they experience where surprise is defined as the informational distance (KL divergence) between the expectations of their generative model and the actual generated thing – between what is expected and what is achieved. General processes implementing this surprise reduction strategy employ a generative model to specify and control an autopoietic or self-creating and self-maintaining process orchestrating the forces of lower-level building blocks to bestow existence on a generated thing (15). Through careful conformance to its model, the generated thing is more likely to turn out as specified and thus achieve minimum surprise.

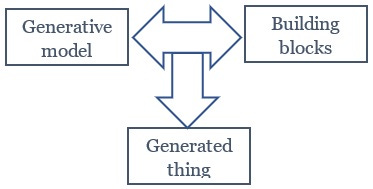

Figure 2: A general process of entity formation. Exercising downward causation, a knowledgeable generative model orchestrates and regulates mass and energy acting in upward causation through physical laws.

But this synergy between upward and downward causation still leaves much unexplained. As shown in figure 2, the process is linear and raises the question of both the origin of the generative model and the effect of the generated thing.

What is the origin of the generative model? In the example of biology above the answer is well known – the (epi) genetic generative model has accumulated its knowledge through natural selection acting over billions of years. And what is the effect of the generated thing? In biology the experience of the generated thing or phenotype in achieving an existence (reproductive success) composes the evidence updating the genetic model, at the population level, to greater existential knowledge.

In this light the generative model and the generated thing assume a cyclical rather than a linear relationship, where the generative model sculpts the generated thing from the clay of its building blocks, and the generated thing updates the generative model to greater knowledge with the evidence of its existential success.

Figure 3

I have referred to systems that achieve and maintain their existence through this two-step process as inferential systems (16). In these systems upward causation is in terms of the building blocks’ physical and chemical potentials. And downward causation is orchestrated by the generative model during the autopoietic step of the process. Thus, the inclusion of information and knowledge as fundamental physical concepts forces an expansion of the reductionist framework to one that can no longer be called reductionist because it relies upon knowledge at a higher level to supplement fundamental processes at a lower level.

New to this paradigm is its second step where autopoietic success is used as evidence to update the model that generates it. Natural selection serves as an example of this model updating as it is a process in which the genetic model is updated with the evidence of the phenotypes it brings into existence (17; 18; 16). The evidence that updates the genetic model is the evidence of fitness in the form of reproductive success. With each generation, the population level genome forms a probabilistic model composed of genetic alleles, with each allele assigned the probability of its relative frequency within the population, and in each generation these probabilities are updated, in a Bayesian manner, due to the evidence of fitness they produce.
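The updating of allele probabilities described above can be sketched in a few lines. This is a minimal illustration under simplifying assumptions that are not in the text: a haploid population, two alleles with hypothetical labels and fitness values, and no mutation or drift. The update has the same form as Bayes' rule, with the allele frequency as the prior, the fitness as the likelihood, and the mean fitness as the normalising constant.

```python
def select(allele_freqs: dict, fitness: dict) -> dict:
    """One generation of selection: p_i' = p_i * w_i / mean fitness.

    Structurally identical to a Bayesian update, with frequency as prior
    and fitness playing the role of likelihood.
    """
    mean_fitness = sum(allele_freqs[a] * fitness[a] for a in allele_freqs)
    return {a: allele_freqs[a] * fitness[a] / mean_fitness for a in allele_freqs}


# Hypothetical alleles and fitness values, chosen only for illustration.
freqs = {"A": 0.5, "a": 0.5}
fitness = {"A": 1.1, "a": 1.0}

history = [freqs]
for _ in range(50):
    freqs = select(freqs, fitness)
    history.append(freqs)

for generation in (0, 1, 10, 50):
    print(generation, {a: round(p, 4) for a, p in history[generation].items()})
```

Each pass through the loop corresponds to one cycle of the two-step process: the current frequencies generate a population, and the reproductive success of that population becomes the evidence that reshapes the frequencies.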

Evidence is information which acts as a force in Bayesian inference – it moves the probabilistic model to a new shape containing greater existential knowledge. Crucially all evidence is evidence of existence; to qualify as evidence, it must have an actual existence. And in turn, this means that evidence can only have relevance for and can only update models that hypothesize about possible forms of existence.

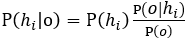

As we have seen, information acts as a force in the process of Bayesian inference, where the degree of plausibility granted to each hypothesis composing a model is updated by information in the form of evidence, updating the prior distribution into a posterior probability:

$$P(h_i \mid o) = \frac{P(o \mid h_i)\,P(h_i)}{P(o)}$$

where $h_i$ is the $i$th hypothesis in a family of exhaustive and mutually exclusive hypotheses describing some event, forming a model of that event, and $o$ is a new observation or evidence. A prior probability, $P(h_i)$, is transformed into a posterior probability, $P(h_i \mid o)$. The term describing the force doing the heavy lifting in moving the prior to the posterior is the likelihood, $P(o \mid h_i)$, describing the informational force of the current observation (13).
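A small numerical sketch may make this update concrete. It is an illustration only: the three hypotheses about a coin's bias, the observation sequence, and the function names are all hypothetical. It applies the standard form of Bayes' rule to each observation in turn, then measures how far the model has moved from its uniform starting point as the Kullback-Leibler divergence from prior to posterior, one common way of quantifying information gain.

```python
import math


def bayes_update(prior, likelihood):
    """Posterior over hypotheses: P(h_i | o) = P(o | h_i) * P(h_i) / P(o)."""
    joint = [p * l for p, l in zip(prior, likelihood)]
    evidence = sum(joint)  # P(o), the normalising constant
    return [j / evidence for j in joint]


def information_gain_bits(prior, posterior):
    """KL divergence D(posterior || prior) in bits: how far the model moved."""
    return sum(q * math.log2(q / p) for p, q in zip(prior, posterior) if q > 0)


# Hypothetical model: three hypotheses about a coin's probability of heads.
heads_prob = [0.25, 0.5, 0.75]
initial_model = [1 / 3, 1 / 3, 1 / 3]  # uniform prior: maximal ignorance
observations = "HHTHHHTH"              # made-up evidence

model = initial_model
for o in observations:
    likelihood = [h if o == "H" else 1 - h for h in heads_prob]
    model = bayes_update(model, likelihood)

gain = information_gain_bits(initial_model, model)
print("posterior:", [round(p, 4) for p in model])
print(f"information gain: {gain:.3f} bits")
```

The posterior ends up concentrated on the hypothesis that best predicts the observations, moving the model away from the uniform distribution toward a more spiked one.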

Reductive explanations, even Dawkins’ hierarchical reductionism, must ultimately explain phenomena in terms of fundamental physical forces, energies, and masses. For example, Dawkins’ reductionist example of a car describes it in terms of its parts such as its carburettor. But a fundamental explanation must progress down this hierarchy ultimately to an explanation in terms of atoms or subatomic constituents and their abstract description in terms of forces, energies, and masses.

We have suggested a transformation of reductive explanations required by the addition of information and knowledge to nature’s small repertoire of fundamental components. Reductionist explanations on their own have brought us a great deal of understanding, but they struggle to explain why, out of the nearly infinite possible configurations of mass, energy, and forces, only a few complex forms such as life have achieved an existence. Reductionist explanations for complexity depend on special initial and boundary conditions, and they can offer no explanation for the knowledge contained in these conditions which beget complexity (20).

One effect of this reductionist deficiency is that it precludes a unified understanding of natural processes. Given the laws of nature and the operative initial and boundary conditions, reductionism can explain a specific system’s further evolution. But the conditions orchestrating each type of system appear quite different. It appears that atoms operate differently than cells, cells differently than brains and brains differently than cultures.

The explanatory void left in the wake of reductionwasism may be remedied by the introduction of information and knowledge as fundamental entities. At a stroke these offer a solution to the problem of initial and boundary conditions in that the knowledgeable conditions orchestrating complexity evolve from the evidence generated by their actions (16). As illustrated in figure 3, this may lead to an evolutionary process of knowledge accumulation forming the initial and boundary conditions for each generation. And as demonstrated in various lines of emerging research, such as the free energy principle, the inferential and evolutionary accumulation of knowledge and its testing is a common mechanism of all existing things. And this makes the universe much more easily knowable – if you know a little about inferential processes, you know a little about everything.

References

1. Wikipedia. Reductionism. Wikipedia. [Online] [Cited: July 13, 2022.] https://en.wikipedia.org/wiki/Reductionism.

2. Dawkins, Richard. The blind watchmaker. s.l. : Oxford University Press, 1976. ISBN 0-19-286092-5.

3. How Downwards Causation Occurs in Digital Computers. Ellis, George. s.l. : Foundations of Physics, 2019, Vol. 49(11), pp. 1253-1277.

4. Ulanowicz, R.E. Ecology: The Ascendant Perspective. s.l. : Columbia University Press, 1997. ISBN 0-231-10828-1.

5. Developmental Ascendency: From Bottom-up to Top-down Control. Coffman, James. s.l. : Biological Theory, 2006, Vol. 1, pp. 165-178. doi: 10.1162/biot.2006.1.2.165.

6. Information in the holographic universe. Bekenstein, Jacob. August 2003, Scientific American.

7. Information, Physics, Quantum: The Search for Links. Wheeler, John Archibald. Tokyo : Proceedings III International Symposium on Foundations of Quantum Mechanics, 1989. pp. 354-358.

8. Universal Darwinism as a process of Bayesian inference. Campbell, John O. s.l. : Front. Syst. Neurosci., 2016, System Neuroscience. doi: 10.3389/fnsys.2016.00049.

9. Wikipedia. Vitalism. [Online] 2013. [Cited: November 10, 2013.] http://en.wikipedia.org/wiki/Vitalism.

10. Schrödinger, Erwin. What is life? s.l. : Cambridge University Press, 1944. ISBN 0-521-42708-8.

11. A mathematical theory of communications. Shannon, Claude. 1948, Bell System Technical Journal.

12. Cartlidge, Edwin. Information converted to energy. physicsworld. [Online] 2010. [Cited: May 31, 2022.] https://physicsworld.com/a/information-converted-to-energy/#:~:text=In%20fact%2C%20Szil%C3%A1rd%20formulated%20an,is%20the%20temperature%20of%20the.

13. Experimental demonstration of information-to-energy conversion and validation of the generalized Jarzynski equality. Toyabe, Shoichi, et al. s.l. : Nature Phys, 2010, Vol. 6, pp. 988–992. https://doi.org/10.1038/nphys1821.

14. Sentience and the Origins of Consciousness: From Cartesian Duality to Markovian Monism. Friston, Karl J., Wiese, Wanja and Hobson, J. Allan. s.l. : Entropy, 2020, Vol. 22. doi:10.3390/e22050516.

15. Simple unity among the fundamental equations of science. Frank, Steven A. s.l. : arXiv preprint, 2019.

16. Free Energy, Value, and Attractors. Friston, Karl and Ping Ao. s.l. : Comp. Math. Methods in Medicine, 2012.

17. Campbell, John O. The Knowing Universe. s.l. : Createspace, 2021. ISBN-13: 979-8538325535.

18. —. Darwin does physics. s.l. : CreateSpace, 2015.

19. Active inference and epistemic value. Friston K, Rigoli F, Ognibene D, Mathys C, Fitzgerald T, Pezzulo G. s.l. : Cogn Neurosci., 2015, Vol. 6(4), pp. 187-214. doi: 10.1080/17588928.2015.1020053.

20. Temporal Naturalism. Smolin, Lee. s.l. : http://arxiv.org/abs/1310.8539, 2013, Preprint.

21. Laplace, Pierre-Simon. A philosophical essay on probabilities. [trans.] Frederick Wilson Truscott and Frederick Lincoln Emory. New York : JOHN WILEY & SONS, 1902.

[1] In the sense that knowledge encoded within DNA is the brains of a process essential to the creation and smooth running of living things – making genetics the custodian of knowledge forming the initial and boundary conditions.